Artificial Intelligence Tool BERT

DESCRIPTION

BERT, which stands for Bidirectional Encoder Representations from Transformers, is a language model that Google introduced in 2018. It is designed primarily for natural language processing (NLP) tasks, where it helps machines interpret human language accurately. It employs a transformer architecture that processes each word in relation to all the other words in a sentence, rather than one by one in order. This bidirectional view lets BERT infer the meaning of a word from the words on both sides of it, producing a more nuanced interpretation of language than earlier models that read text in a single direction.
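To make "each word in relation to all the other words" concrete, here is a minimal sketch of scaled dot-product self-attention, the core operation of a transformer. It is a simplification and not BERT's actual implementation: it assumes a single head with no learned query, key, or value projections, and the two-dimensional embeddings are made-up values chosen only for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Single-head self-attention without learned weights (an assumption).

    Each output vector is a weighted average of ALL input vectors, so every
    position draws on the words to its left and to its right.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score the current word against every word in the sentence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Mix all word vectors according to those weights.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical toy embeddings; real BERT-base vectors have 768 dimensions.
tokens = ["the", "bank", "lends", "money"]
embeddings = [[0.1, 0.0], [0.9, 0.3], [0.4, 0.8], [0.7, 0.6]]

contextual, weights = self_attention(embeddings)
bank_weights = dict(zip(tokens, weights[1]))
print(bank_weights)
```

The printed weights for "bank" are nonzero for "the" on its left and for "lends" and "money" on its right, which is the sense in which the representation is bidirectional.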

One of the key functionalities of BERT is its ability to handle context-dependent word meanings, or polysemy. For instance, the word “bank” on its own could refer to a financial institution or to the side of a river, but in the sentence “The bank can refuse to lend money,” the words that follow point to the financial sense. BERT’s architecture allows it to analyze both the preceding and following words to derive the correct interpretation. This capability is particularly useful in applications such as search engines, where understanding user queries accurately can improve the relevance of search results and user satisfaction.

The practical impact of BERT has been substantial across industries that rely on language comprehension. In customer service, for instance, BERT’s ability to interpret inquiries accurately can streamline support interactions and reduce the workload on human agents. BERT has also been integrated into tools for sentiment analysis, enabling businesses to gauge public opinion on products or services more effectively. By leveraging BERT, organizations can gain a deeper understanding of customer sentiments and preferences, which supports better decision-making and improved customer relations.

Why choose BERT for your project?

BERT (Bidirectional Encoder Representations from Transformers) excels at understanding context, making it well suited to tasks like sentiment analysis and question answering. Its bidirectional approach allows it to grasp nuances in language, improving accuracy in text classification and entity recognition. Because the model is pre-trained, it can be fine-tuned on a specific dataset and perform well with relatively little labeled data. Practical use cases include chatbots that provide more relevant responses, search engines that deliver more precise results, and content moderation tools that identify harmful language. Additionally, BERT integrates with existing NLP frameworks, making it accessible for developers who want to add advanced language understanding to their projects.

How to start using BERT?

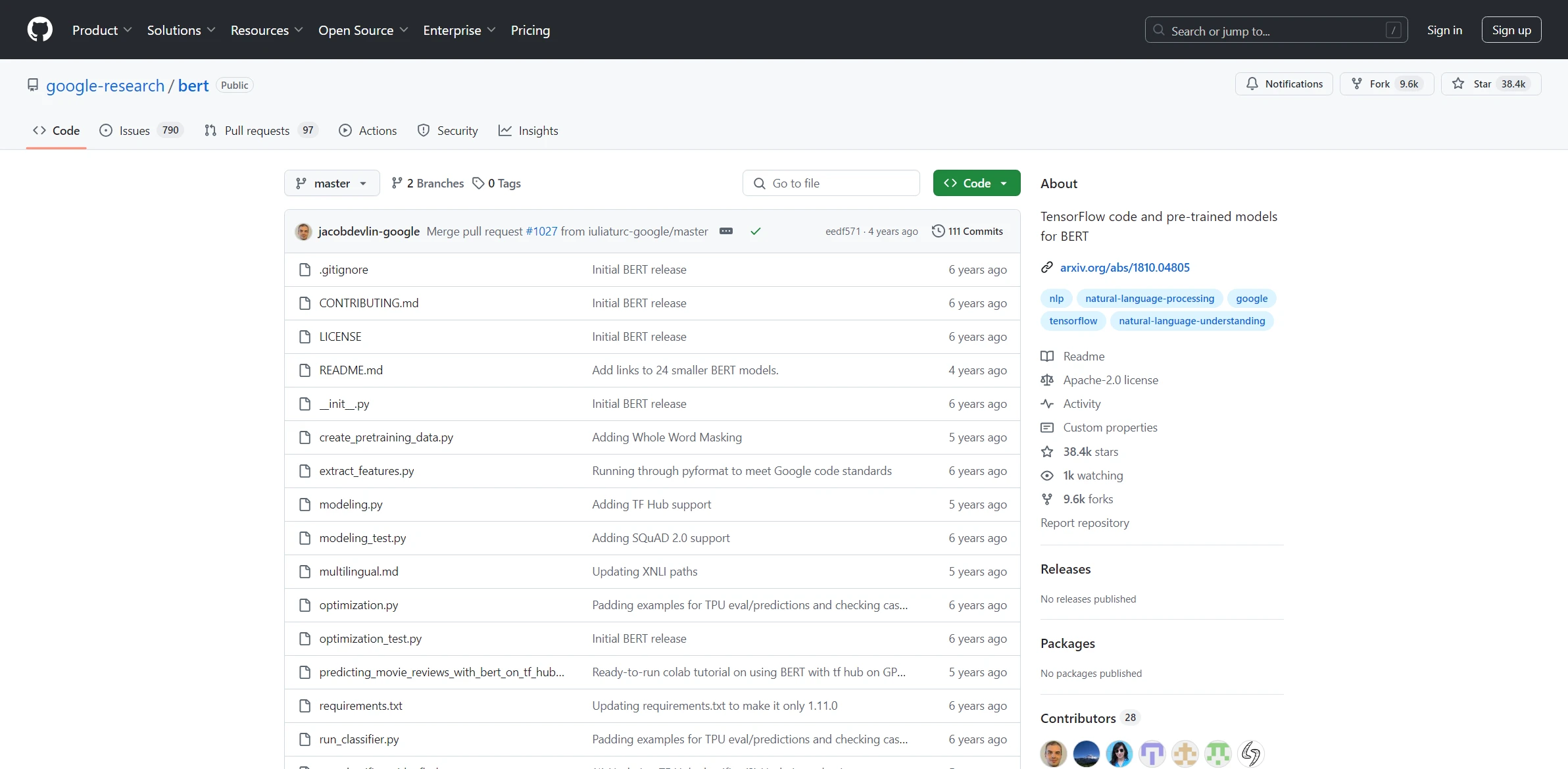

- Install the necessary libraries and dependencies, such as TensorFlow or PyTorch, to run the tool.

- Download a pre-trained model from the Hugging Face Model Hub or other sources.

- Preprocess your text data by tokenizing it using the BERT tokenizer, ensuring it is in the correct format.

- Feed the preprocessed data into the model to make predictions or extract features.

- Post-process the model’s output, interpreting the results based on your specific use case, such as classification or named entity recognition.
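The preprocessing and post-processing steps above can be sketched in plain Python. This is an illustration under stated assumptions, not the real BERT tokenizer: the vocabulary `VOCAB` is a tiny hypothetical one, the helper names `wordpiece`, `encode`, and `postprocess` are invented for this example, and the logits passed to `postprocess` are made-up numbers standing in for the model's output. What it does reflect is the general shape of BERT input: greedy longest-match WordPiece splitting, `[CLS]` and `[SEP]` markers, padding, and an attention mask.

```python
import math

# Hypothetical toy vocabulary; a real BERT vocabulary has roughly 30,000 entries.
VOCAB = ["[PAD]", "[UNK]", "[CLS]", "[SEP]",
         "the", "bank", "lend", "##s", "money", "refuse", "##d"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}

def wordpiece(word):
    """Greedy longest-match-first split of one word into subword pieces."""
    pieces, start = [], 0
    while start < len(word):
        end, match = len(word), None
        while end > start:
            # Pieces that continue a word carry the "##" prefix.
            candidate = word[start:end] if start == 0 else "##" + word[start:end]
            if candidate in TOKEN_TO_ID:
                match = candidate
                break
            end -= 1
        if match is None:
            return ["[UNK]"]  # no piece fits: the whole word is unknown
        pieces.append(match)
        start = end
    return pieces

def encode(text, max_len=10):
    """Lowercase, split on whitespace, add special tokens, pad to max_len."""
    tokens = ["[CLS]"]
    for word in text.lower().split():
        tokens.extend(wordpiece(word))
    tokens = tokens[: max_len - 1] + ["[SEP]"]
    attention_mask = [1] * len(tokens) + [0] * (max_len - len(tokens))
    tokens = tokens + ["[PAD]"] * (max_len - len(tokens))
    input_ids = [TOKEN_TO_ID[t] for t in tokens]
    return tokens, input_ids, attention_mask

def postprocess(logits, labels):
    """Convert raw classifier scores into a label and its probability."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]

tokens, input_ids, attention_mask = encode("The bank lends money")
print(tokens)
print(input_ids)
print(attention_mask)

# Made-up logits standing in for the output of a fine-tuned classifier.
label, confidence = postprocess([-1.2, 2.3], ["negative", "positive"])
print(label, round(confidence, 3))
```

In practice a library tokenizer and a pre-trained model replace the toy pieces here, but the same three artifacts (token IDs, attention mask, and a label derived from logits) appear in that workflow too.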

PROS & CONS

Pros:

- Superior understanding of context, allowing for more nuanced interpretations of language.

- Enhanced performance in tasks like question answering and sentiment analysis compared to traditional models.

- Ability to process text bidirectionally, leading to better comprehension of complex sentence structures.

- Fine-tuning capabilities enable customization for specific applications, improving relevance and accuracy.

- Extensive pre-training on diverse datasets, resulting in a robust knowledge base for various language tasks.

Cons:

- Requires significant computational resources, making it less accessible for smaller organizations.

- Can struggle with understanding context in highly nuanced or ambiguous language.

- May produce biased outcomes if trained on unbalanced datasets.

- Limited ability to handle real-time data processing compared to some other models.

- Requires extensive fine-tuning and specialized knowledge to achieve optimal performance.
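To give a rough sense of the computational cost mentioned above, the following sketch counts the parameters of a BERT-base-sized encoder. The default sizes (12 layers, hidden size 768, feed-forward size 3072, 512 positions, a 30,522-entry vocabulary) are assumptions taken from the published BERT-base uncased configuration, not from this page, and the function name `bert_encoder_params` is hypothetical. The count covers the encoder and pooler only, without any task-specific head.

```python
def bert_encoder_params(vocab=30522, hidden=768, layers=12,
                        ffn=3072, max_pos=512, segments=2):
    """Count weights and biases in a BERT-style encoder (assumed layout)."""
    layer_norm = 2 * hidden  # scale and bias
    # Token, position, and segment embeddings, followed by a LayerNorm.
    embeddings = (vocab + max_pos + segments) * hidden + layer_norm
    # Query, key, value, and output projections, followed by a LayerNorm.
    attention = 4 * (hidden * hidden + hidden) + layer_norm
    # Two dense layers (expand, then project back), followed by a LayerNorm.
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden) + layer_norm
    # Dense layer applied to the [CLS] position.
    pooler = hidden * hidden + hidden
    return embeddings + layers * (attention + feed_forward) + pooler

total = bert_encoder_params()
weights_mib = total * 4 / 2**20  # 4 bytes per 32-bit float

print(f"{total:,} parameters")
print(f"about {weights_mib:.0f} MiB of weights in float32")
```

This covers the stored weights only. Training needs several times more memory for gradients, optimizer state, and activations, which is where most of the resource pressure comes from.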

USAGE RECOMMENDATIONS

- Understand the architecture and how it differs from traditional models.

- Utilize pre-trained models for specific tasks to save time and resources.

- Fine-tune on your domain-specific dataset for better performance.

- Experiment with different hyperparameters during training to optimize results.

- Use BERT for tasks like text classification, named entity recognition, and question answering.

- Incorporate BERT into your existing NLP pipeline.

- Leverage BERT’s ability to handle context by using it for sentence similarity tasks.

- Monitor resource consumption as BERT can be resource-intensive.

- Explore transfer learning techniques to enhance model performance on smaller datasets.

- Stay updated with the latest research and advancements in BERT and its variants.
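For the sentence similarity recommendation in the list above, one common approach is to average the per-token vectors of each sentence and compare the averages with cosine similarity. The sketch below assumes that approach. The three-dimensional vectors are made-up stand-ins for a model's token outputs, and `mean_pool` and `cosine_similarity` are helper names introduced for this example.

```python
import math

def mean_pool(token_vectors, attention_mask):
    """Average the vectors of real tokens, skipping padding (mask == 0)."""
    dim = len(token_vectors[0])
    kept = [vec for vec, keep in zip(token_vectors, attention_mask) if keep]
    return [sum(vec[i] for vec in kept) / len(kept) for i in range(dim)]

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical token vectors; the third token of sentence A is padding.
sentence_a = mean_pool([[0.9, 0.1, 0.0], [0.7, 0.3, 0.0], [0.0, 0.0, 0.0]], [1, 1, 0])
sentence_b = mean_pool([[0.8, 0.2, 0.1], [0.6, 0.4, 0.1]], [1, 1])
sentence_c = mean_pool([[0.0, 0.1, 0.9], [0.1, 0.0, 0.8]], [1, 1])

sim_ab = cosine_similarity(sentence_a, sentence_b)
sim_ac = cosine_similarity(sentence_a, sentence_c)
print(f"A vs B: {sim_ab:.3f}")
print(f"A vs C: {sim_ac:.3f}")
```

Masking matters here: without it, padding vectors would drag the average toward zero and distort the comparison between sentences of different lengths.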